Virtual Immersive Training And Learning (VITAL)

- Our research explores the use of virtual and immersive technology for training, learning, and therapy.

- We use these technologies to develop experimental paradigms that help us to explore research questions relating to sensorimotor control, perceptual cognitive expertise, skill learning, and health and well-being.

- We work across a range of academic disciplines including cognitive psychology, pedagogy, human factors, data science, and neuroscience. Our research is underpinned by psychological theories of human perception, cognition and emotion.

- We adopt a number of other measurement tools, including eye tracking, motion capture and psychometric tests.

Our objectives

- To understand how immersive technology is used safely and effectively in applications to training and learning.

- To harness the power of immersive tools and techniques (including artificial intelligence and spatial computing) to understand how humans behave, perform, and learn in complex environments.

- To develop best practice in designing, developing and implementing these technologies in all of their societal applications (education, medicine, industry).

Current projects

More current and past research

Surgery

Surgery simulation and robotics

We performed a number of studies to explore the fidelity of surgical simulations for laparoscopic surgery. We tested the construct validity of a TURP simulator and also adopted eye tracking technology to validate the simulation against real-life operations.

With funding from Intuitive Surgical, we also explored the performance and cognitive benefits of the DaVinci surgical robot from the perspective of the surgeon, including learning, stress and workload.

We also investigated the role of observational learning in the acquisition of robotic surgical skills.

Publications

- Face validity, construct validity and training benefits of a virtual reality TURP simulator.

- Assessing visual control during simulated and live operations: gathering evidence for the content validity of simulation using eye movement metrics.

- Robotic technology results in faster and more robust surgical skill acquisition than traditional laparoscopy.

- Robotically assisted laparoscopy benefits surgical performance under stress.

- Surgeons display reduced mental effort and workload while performing robotically assisted surgical tasks, when compared to conventional laparoscopy.

- Action observation for sensorimotor learning in surgery.

- A randomised trial of observational learning from 2D and 3D models in robotically assisted surgery.

360° video: the DaVinci surgical robot


Falls

How fear of falling influences balance in older adults

The aim of this project is to evaluate psychological factors (e.g. anxiety about falling) that influence the control of balance and walking in older adults. As part of the project, older adults are asked to walk through a virtual environment, such as an uneven pavement.

We record how older people visually search their environment, how they generate recovery steps following external perturbations (experimentally simulating a slip/trip), and evaluate how these processes are influenced by anxiety and cognitive decline. This project is based at the Vsimulators facility.

Contact

For more information on this project, please contact Dr Will Young.

Parkinson's

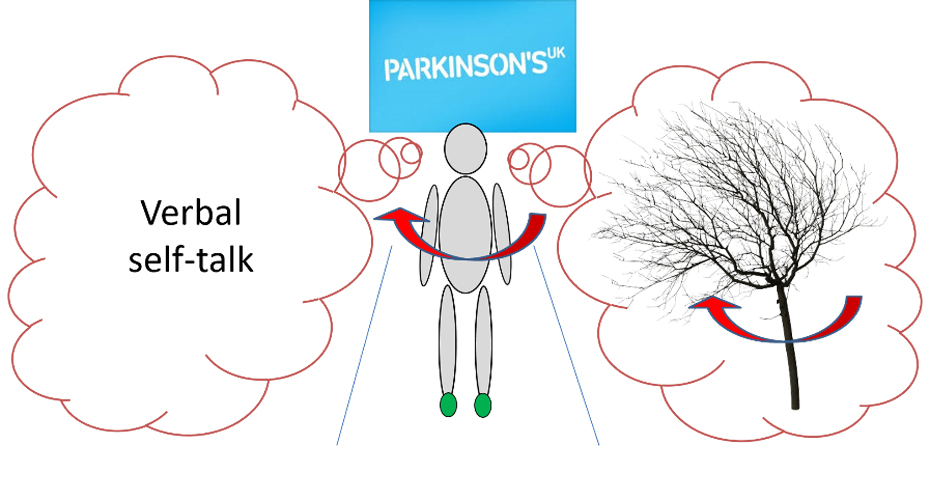

Developing low-cost cues to help people with Parkinson’s overcome Freezing of Gait

Freezing of Gait (FOG) is one of the most disabling features of advanced Parkinson’s. Often described as the sensation of one’s feet being ‘stuck’ to the ground, affected individuals often avoid leaving their homes for fear of falling or embarrassment.

As freezing is exacerbated by anxiety, sports psychologists are well-placed to design strategies that might help people allocate attention in more efficient ways to avoid freezing or initiate a step from a freeze during anxiety-provoking situations.

This project involves the development and evaluation of a range of strategies that might help people to overcome freezing, not only in laboratory settings, but in daily life both inside and outside their homes.

Testing VR

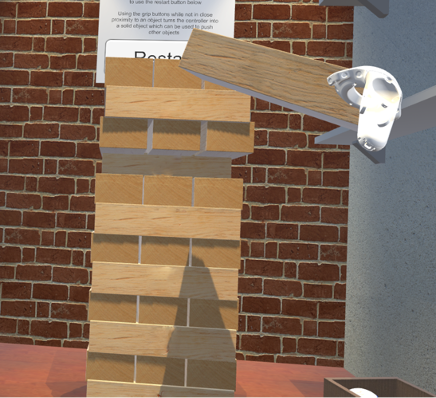

Methods for design, development and testing of virtual environments

Virtual reality (VR) offers the potential for immersive and engaging solutions for experimentation and training. However, if VR training is to be adopted and used in an effective and evidence-based fashion, more rigorous ways of testing the validity of the simulation are required.

Construct validity is the degree to which the simulation provides an accurate representation of the core features of the task. In the context of sport, if the training drills in the VR environment are a true representation of the skills needed in the real world, then those who excel at the sport in the real world should also excel in the virtual one. The same is true in a range of industries that are attempting to adopt VR for learning and training.

This line of work is developing methodologies and ideas surrounding the testing and validation of virtual reality simulations.
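The known-groups reasoning behind construct validity (real-world experts should outperform novices in the simulation) can be illustrated with a short Python sketch. This is a minimal example under stated assumptions, not the analysis used in the publications listed here: the scores are invented for illustration, the function name `cohens_d` is our own, and a standardised mean difference is only one of several ways such a comparison could be summarised.

```python
from math import sqrt
from statistics import mean, stdev


def cohens_d(group_a, group_b):
    """Standardised mean difference between two independent groups (pooled SD)."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = (
        (n_a - 1) * stdev(group_a) ** 2 + (n_b - 1) * stdev(group_b) ** 2
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_var)


# Hypothetical simulator scores (higher = better), invented for illustration only.
expert_scores = [82, 90, 77, 88, 85]
novice_scores = [61, 70, 58, 66, 64]

d = cohens_d(expert_scores, novice_scores)
print(f"Cohen's d (experts vs novices): {d:.2f}")
```

A clearly positive value would be consistent with the simulation distinguishing between skill levels; a value near zero would suggest that the simulated task does not capture what separates experts from novices.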

Downloads

Taxonomy of fidelity and validity and successful transfer of learning from VR

Correlation matrix (with Pearson correlation coefficients) between SIM-TLX subscales and overall workload measure

If you require these downloads in a different format, please contact digitalteam@exeter.ac.uk

Publications

- Development and validation of a simulation workload measure: the simulation task load index (SIM-TLX)

- A Framework for the Testing and Validation of Simulated Environments in Experimentation and Training

- Testing the construct validity of a soccer-specific virtual reality simulator using novice, academy, and professional soccer players

Contact

For more information on this project, please contact Dr Sam Vine or Dr David Harris.

Driving

Driving simulation

We have previously used simulated rally driving to explore the link between eye movements and steering movements and test theories about the disruptive effects of anxiety on attention.

Additionally, simulated driving is an ideal environment in which to explore the psychophysiological determinants of flow, a peak performance state of intense concentration and motivation (see publications).

Publications

- Prevention of coordinated eye movements and steering impairs driving performance

- The role of effort in moderating the anxiety – performance relationship: Testing the prediction of processing efficiency theory in simulated rally driving

- Is Flow Really Effortless? The Complex Role of Effortful Attention

- An external focus of attention promotes flow experience during simulated driving

Aviation

With funding from the Higher Education Funding Council for England (HEFCE), we worked with Exeter-based airline Flybe to use flight simulators to assess pilots' reactions to pressure.

Publication

More

Military simulation research

We delivered a research project, funded by the Defence Science and Technology Laboratory, which utilised a weapon simulation (see image).

The research explored the use of quiet eye training to improve marksmanship skills in simulated environments. Read a publication about this work: Quiet Eye Training Improves Small Arms Maritime Marksmanship.

Modulating multisensory information to explore pain

A PhD project examining how VR technologies can be used to alleviate chronic and acute wrist pain. The work has clear practical applications and also offers a unique opportunity to understand the psychological underpinnings and fundamental drivers of pain.

Mixed reality learning: robots and dinosaurs

A £5.6M project (£4M from Innovate UK and £1.6M from the private sector) to develop a new mixed and virtual reality educational experience. The funding comes from the Audience of the Future Demonstrator fund (Industrial Strategy Challenge Fund). Exeter received ~£100k to tackle research questions relating to immersive technology for education and creative experience. The lead organisation is FACTORY 42, and other partners include The Natural History Museum, The Science Museum, The Almeida theatre company, Magic Leap, and Sky VR.

Developing evidence-based methods of validation

VR simulations are often adopted within training programmes before they have been rigorously tested. Recently we have been developing a methodology for testing and validating virtual environments; see: Testing the fidelity and validity of a virtual reality golf putting simulator and Development and validation of a simulation workload measure: The Simulation Task Load Index (SIM-TLX), for more information.

Evaluating motion capture technology in virtual reality

Many commercially produced VR devices offer integrated motion tracking with a quality head-mounted display, which makes them potentially valuable tools for researchers. To assess the suitability of these systems for scientific research (particularly in sport science and biomechanics), we are comparing the position and orientation estimates of the HTC Vive with those of an industry-standard motion capture system during dynamic movement tasks. This research forms an important first step in the PhD project of Jack Evans, who is undertaking an EPSRC-funded project to integrate virtual reality into the care and rehabilitation of stroke patients.
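As a rough illustration of what comparing position estimates from two tracking systems can involve, the Python sketch below computes the root-mean-square error (RMSE) between two trajectories. It is a sketch under assumptions rather than a description of the project's actual pipeline: it assumes both recordings are already time-synchronised and expressed in the same coordinate frame, the function name `position_rmse` is our own, and the sample coordinates are invented.

```python
from math import sqrt


def position_rmse(track_a, track_b):
    """Root-mean-square Euclidean distance between two time-aligned 3D trajectories."""
    if len(track_a) != len(track_b) or not track_a:
        raise ValueError("trajectories must be non-empty and the same length")
    squared_distances = [
        sum((a - b) ** 2 for a, b in zip(p, q)) for p, q in zip(track_a, track_b)
    ]
    return sqrt(sum(squared_distances) / len(squared_distances))


# Hypothetical (x, y, z) positions in metres, invented for illustration only.
vr_track = [(0.000, 1.000, 0.000), (0.100, 1.002, 0.001), (0.200, 1.001, 0.003)]
reference_track = [(0.000, 1.000, 0.000), (0.101, 1.000, 0.000), (0.199, 1.000, 0.000)]

rmse_m = position_rmse(vr_track, reference_track)
print(f"Position RMSE: {rmse_m * 1000:.2f} mm")
```

In practice, temporal alignment and a spatial calibration between the two systems would be needed before a figure like this is meaningful, and orientation error would need a separate, angle-based measure.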
